Draw a Circle on a Tif

Before I dive into the topic of alpha channel masking in ffmpeg, I should explain what this document is. I'm writing it as a blog post; however, I want it to document what I know about how alpha masking is accomplished in FFMPEG.

Warning: Video examples in this post use VP9 with an alpha channel. At the time of writing, only Firefox and Chrome based browsers will support the content. Edge users (as of April 12, 2020) will see the video but will not see the alpha channel. Safari users will need to convert the videos to H.265 somehow to get the equivalent result.

Basics of Alpha Channel

When we want something that we are filming or recording to be transparent, the technology I think we use most frequently is a thing called chroma keying. You will probably have seen stages or sets with large swathes of green or blue fabric draped all over the place, dyed to a specific colour such that computers can be programmed to recognize that colour in video and automagically strip it from the recording. This is what lets visual effects guys make Superman fly, or X-Wings zip through a Death Star trench run. Since we cannot literally make the world transparent, chroma keying is a clever hack to pretend that it is.

Digital media is different though: since pretend pixels don't have any basis in reality, we can just specify certain areas of images as transparent without worrying about matching colours to an arbitrary "this should be invisible" palette.

The degree to which any given pixel should be transparent is indicated by its alpha channel. Typically, in most digital imagery we use an 8-bit alpha channel (256 shades of transparent). Alpha blending is one of the most simple and widely used mechanisms for compositing digital imagery, and it describes the process of combining translucent foreground images with opaque background images.
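To make the blending idea concrete, here is a minimal Python sketch of the usual "foreground over background" mix for one 8-bit pixel. It is only an illustration: the function names are made up for this post, and real tools may premultiply alpha or work in other colour spaces.

```python
def blend_channel(fg: int, bg: int, alpha: int) -> int:
    """Blend one 8-bit colour channel of a foreground pixel over a background.

    alpha is the foreground's 8-bit alpha value:
    0 is fully transparent, 255 is fully opaque.
    """
    a = alpha / 255.0
    return round(a * fg + (1.0 - a) * bg)


def blend_pixel(fg_rgb, bg_rgb, alpha):
    """Apply blend_channel to each of the R, G and B channels."""
    return tuple(blend_channel(f, b, alpha) for f, b in zip(fg_rgb, bg_rgb))


red = (255, 0, 0)
white = (255, 255, 255)
print(blend_pixel(red, white, 255))  # opaque foreground: the red wins
print(blend_pixel(red, white, 0))    # transparent foreground: the white wins
print(blend_pixel(red, white, 51))   # alpha 51 of 255, a pale pink mix
```

With 256 possible alpha values per pixel, anything between "all foreground" and "all background" is just a weighted average of the two.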

Support for alpha channels in many media codecs is limited because of historical implementation decisions. For instance, the ubiquitous jpeg format has no internal support for alpha transparency whatsoever, nor does h.264 (often called mp4, which is really just a cardboard box you can shove movies into). Some digital tools will implement chroma keying instead of alpha blending because of technical limitations of these common data codecs.

Many codecs don't include alpha channel support since the imagery the designers envisioned encoding with those codecs doesn't have any transparency in it. For instance, consider any digital camera: since the real world that the camera is recording is not transparent, the format that the camera will save its image to has no need to support an alpha channel layer. Whichever photons arrive at the camera are the ones that colour and shade the image.

In digital video, the modern codecs that I know support an alpha channel are VP9 from Google, ProRes 4444 and h265 from Apple (and others). Support for these codecs is rather mixed when I am writing these words. Moreover the ProRes variant is not intended for digital distribution, it's a format intended for storing high quality intermediary or raw files for editing.

Clear so far? Possibly, but this discussion of codecs isn't done yet. We have compatibility problems to sort out still.

Digital Cardboard Boxes

It is time to talk about containers. I used to get very confused why some videos I would download from the internet that had a familiar file extension would play on my computer and some would not, despite sharing the same familiar file extension.

I originally encountered this with the AVI file extension: some videos would play and others would complain about something to do with codecs? Well, it turns out that videos stored as AVI aren't actually a fixed format, rather AVI is a storage container where people could put a variety of audio or video content encoded with different technologies. My computer simply did not have the software installed to understand all the various types of encoded video that it was encountering. So despite the fact my computer could open and understand an AVI file, it did not understand the particular language with which the contents were written.

This problem is even more complicated today than it was in 1992 when I first started having these issues.

There are LOTS of different container formats these days (.mov, .mp4, .mkv, .webm, .flv and many others). Most containers can store a massive variety of different video and audio formats. For instance the prolific h.264 format can easily be stored in most of the containers listed above. Software support for all the various containers is extremely mixed. Even worse is the support for the actual formats themselves.

Alpha channel support in video codecs

Ok so let's just imagine you are a mere mortal and want to create an animated video clip with an alpha channel, what are your options today (April, 2020)?

I think it's right to say that there are at least 3 codec categories:

- Capture

- Intermediate

- Distribution

A capture format would be like the raw video from a digital camera or an animation project format in something like Blender or another animation suite. Intermediate formats (like ProRes) are great for editing video clips because their internal storage layout allows tools to quickly decompress and scrub through their contents without causing extra load on the computer, at the cost of increased storage requirements. Distribution codecs (like h.264, vp8 and vp9) do a great job at compressing the video stream, at the cost of requiring extra computation time to render each individual frame.

Intermediate codecs with alpha channel support

| Format | ffmpeg | Resolve | FinalCut | Premiere |
|---|---|---|---|---|
| ProRes4444 | Full | Partial | Full | Full |
| Cineform | Decode | Full | ? | ? |
| DNxHR | No Alpha | Full | ? | ? |
| QTRLE | Full | None | Full | ? |
| TIFF | Full | Full | Full | Full |

Warning: ProRes4444 export from Davinci Resolve is only supported on Linux and Mac platforms (at time of writing, April 2020).

ProRes4444 is probably the most widely supported intermediate stage codec, but since I cannot export ProRes4444 files from my Windows Davinci Resolve suite, using it requires some extra effort.

Actually, and this really surprised me, the best supported cross-platform way of storing intermediate video files for animation is to not use a video format at all and instead just save a big collection of compressed TIFF images, one for each rendered frame, and pretend it's a video like you may have done with children's animated flip books as a youth.

Distribution codecs with alpha channel support

| Format | ffmpeg | Resolve |
|---|---|---|
| HEVC H.265 | No Alpha | Decode |
| VP8 | Full | None |
| VP9 | Full | None |

H.265 is the upcoming replacement to H.264 and does support an alpha channel in its specification, but toolchain support for that technology is really only available on Apple platforms when I am writing these words.

Warning: H.264 does NOT support alpha channel and is not included here.

Note: ffmpeg does actually have an h.265 encoder via a third party library, but right now it does not support alpha channel to my knowledge, though I would be happy to be proven wrong about this.

Currently there is no widely available distribution type codec that supports an alpha channel and works across all devices, but VP9 does have a massive adoption base from all recent versions of Chrome and Firefox. Presumably, since Edge already supports VP9 it will add alpha channel support at some point in the future, but today it does not support VP9 alpha blending.

Codec Choices Summary

As best I can tell from experimentation and researching throughout the public Internet, ProRes4444 has the most toolchain support across common platforms. However, using sequences of compressed TIFF images is completely supported in every editing tool and is probably the best way to store intermediate video content for later editing if maintaining an alpha channel is important.

For archiving and web purposes VP9 can be used in every modern version of Firefox and Chrome, and has official (but not bundled by default) support from Microsoft. Apple users can use H.265, but toolchain support for creating those videos with an alpha channel is very thin at present.

Practical Examples of Alpha Channel Video

So far we've identified a few codecs and technologies we can use to keep track of transparency in videos. Let's take a look at how the ffmpeg tool is used practically to create animated video content with an alpha channel. Then we will dive into animating and masking existing videos with ffmpeg.

Using FFMPEG to Encode VP9 with Alpha Channel

Ok, let's just assume you have already done the work to create an animation sequence of TIFF images that you would like to transform into a VP9 file for distribution onto the web.

The common format for storing VP9 video is the webm container, and you can instruct ffmpeg to create a rough video with instructions like the following:

    ffmpeg -i timer%04d.tif -r 30 -c:v vp9 -pix_fmt yuva420p timer.webm

| Name | Required | Description |
|---|---|---|
| i | Required | Input file(s), %04d is a four digit pattern |
| r | Optional | Frame Rate, default is 25 if unspecified |
| c:v | Required | Codec, VP9 here supports Alpha channel |
| pix_fmt | Required | yuva420p is required to support alpha channel |
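If the %04d pattern looks mysterious, the short Python sketch below shows which file names it stands for, and builds the same argument list as the command above. The helper names are invented for illustration, and the starting frame number of 1 is an assumption about how the frames were exported.

```python
def frame_names(pattern: str, first: int, count: int) -> list:
    """Expand a printf-style pattern such as timer%04d.tif into file names."""
    return [pattern % n for n in range(first, first + count)]


def vp9_alpha_command(pattern: str, fps: int, output: str) -> list:
    """Build the argument list for the VP9 encode shown above."""
    return [
        "ffmpeg",
        "-i", pattern,            # input image sequence
        "-r", str(fps),           # frame rate
        "-c:v", "vp9",            # video codec
        "-pix_fmt", "yuva420p",   # pixel format that carries alpha
        output,
    ]


print(frame_names("timer%04d.tif", 1, 3))
print(" ".join(vp9_alpha_command("timer%04d.tif", 30, "timer.webm")))
```

Passing the list to subprocess.run would launch the encode, assuming ffmpeg is installed and on the PATH.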

You could choose to run 2 passes on the source material to create a slightly higher quality final product like this (on Windows):

    ffmpeg -r 30 -i timer%04d.tif -c:v vp9 -pass 1 -pix_fmt yuva420p -f webm NUL
    ffmpeg -r 30 -i timer%04d.tif -c:v vp9 -pass 2 -pix_fmt yuva420p timer.webm

Using FFMPEG to Encode ProRes4444 with Alpha Channel

Since ProRes enjoys wide support across most video toolchains, here is a command to create a ProRes4444 video clip from a sequence of TIFF images with alpha channel enabled.

    ffmpeg -i timer%04d.tif -r 30 -c:v prores_ks -pix_fmt yuva444p10le prores.mov

| Name | Required | Description |
|---|---|---|
| i | Required | Input file(s), %04d is a four digit pattern |
| r | Optional | Frame Rate, default is 25 if unspecified |
| c:v | Required | Codec, prores_ks here supports Alpha channel |
| pix_fmt | Required | yuva444p10le needed for ProRes alpha channel |

Note: In my testing, using a sequence of TIF images actually takes up less space and is higher overall quality than transcoding to ProRes. Testing was not rigorous though, so take this feedback with a grain of salt.

Using FFMPEG to Preprocess Video for Editing

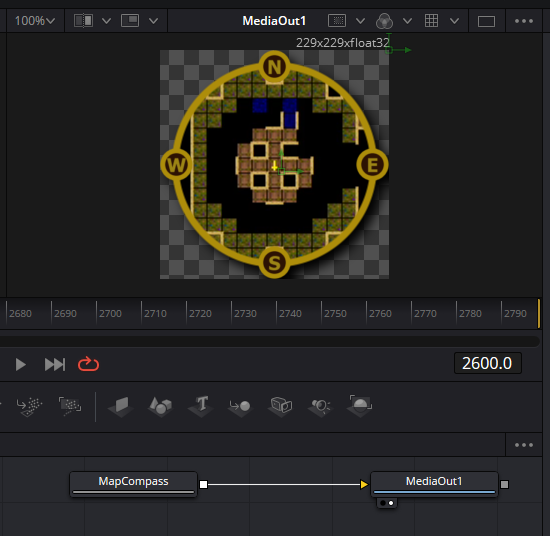

For the rest of this article I'm going to walk through the process I will use to recreate a video clip that looks like this:

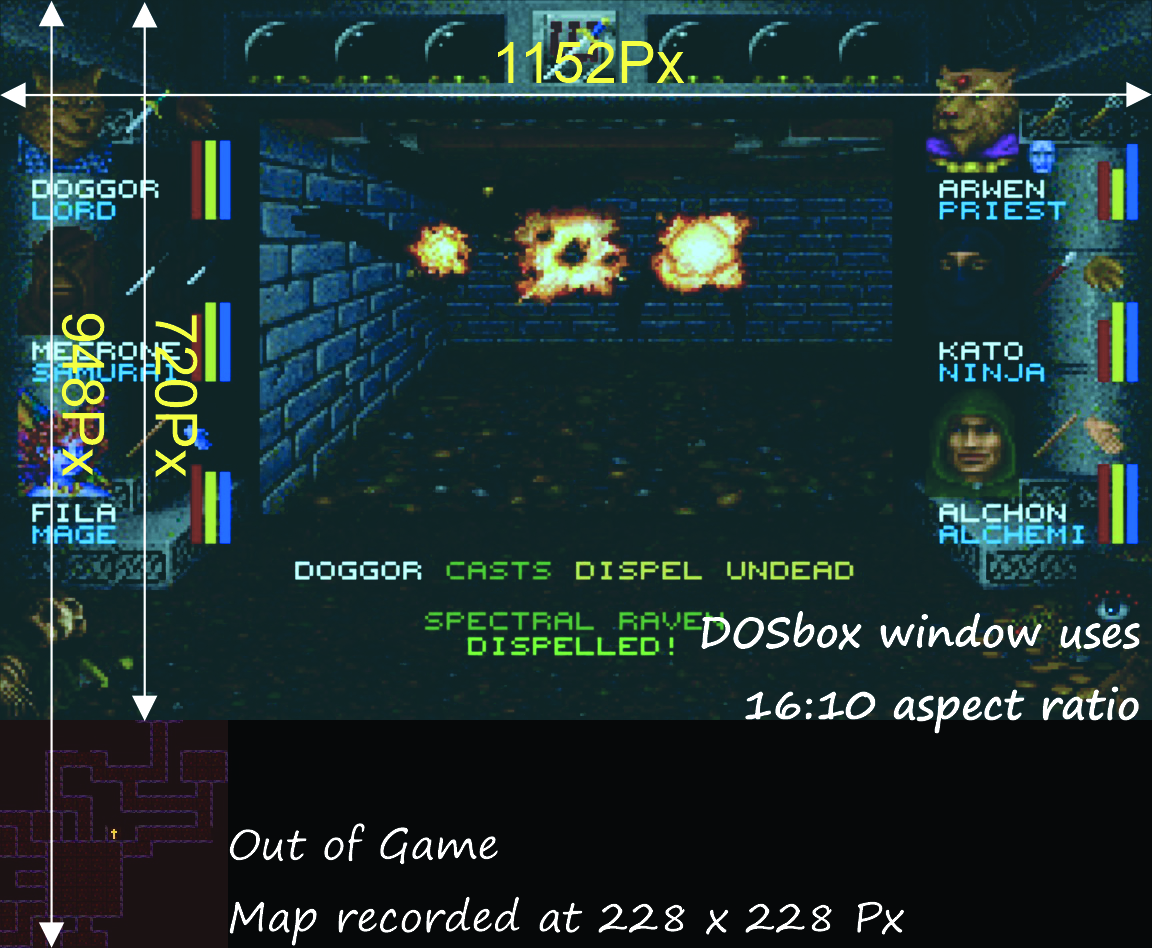

As you may or may not know, as part of a hobby and a wish to develop a new set of skills I have been recording gameplay footage of an old timey DOS game called Wizardry 7: Crusaders of the Dark Savant. I played the game for years as a young lad and figured I would like to share the game with the world since it was so important to my adventures growing up. The game normally plays in a 4:3 DOSBox window and with some gentle massaging I have stretched it to a 16:9 aspect ratio and overlaid an out of game map into the video.

All well and good, what does this have to do with ffmpeg?

It turns out that my old workstation and the handy Davinci Resolve suite don't really get along. It crashes all the time sadly, despite using updated drivers, magical fairy dust, and ground up chili peppers. I resorted to using ffmpeg to preprocess a number of video scenes so that Resolve has fewer things to complain (crash) about.

Particularly, the map element you can see in that image above does not exist in the original game, I am overlaying it on top of the video when appropriate. Moreover it is being recorded from an out of game mapping tool and encoded into the same video stream as the game recording.

Here is where ffmpeg comes into play for my workflow. First thing, I need to crop and resize the gameplay and map into distinct video files. This is where ffmpeg really shines since it allows you to create complex video processing schemes and chain together all sorts of operations.

I am not going to walk step by step through the mechanisms that ffmpeg uses to crop video content here; this has been covered elsewhere sufficiently well that I don't think it's worth trying to recreate that author's guidance here.

With feedback from the above article I created a fancy batch script to preprocess my video content, let's take a look at it:

    set "FG=[0]split[gp][map];"
    set "FG=%FG%[gp]crop=1152:720:0:0,scale=1280:720,setsar=1:1[gps];"
    set "FG=%FG%[map]crop=228:228:0:720[croppedmap];"
    set "FG=%FG%[0:1]highpass=f=200,lowpass=f=3000[gpaudio]"
    ::echo %FG%
    for %%f in (*.mkv) do (
      echo %%~nf
      ffmpeg -i "%%~nf.mkv" ^
        -filter_complex "%FG%" ^
        -map "[gps]" -map "[gpaudio]" -map 0:2 "gp%%~nf.mp4" ^
        -map "[croppedmap]" "map%%~nf.mp4"
    )

Essentially what is happening here is this: for every .mkv file in the directory, I am cropping the map and gameplay video to distinct video streams. Before saving the streams I am passing the gameplay audio through a highpass and a lowpass filter to remove some low-frequency audio artifacts caused by the emulation of the MPU401 audio device.
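For readers who find the chained set lines hard to follow, here is a rough Python sketch that assembles the same filter graph string and works out which rectangle each crop covers. The function names are made up for this illustration; the only ffmpeg fact relied on is that crop takes its values in the order width:height:x:y.

```python
def build_filter_graph() -> str:
    """Join the four filter chains from the batch script with semicolons."""
    chains = [
        "[0]split[gp][map]",                                      # duplicate the video
        "[gp]crop=1152:720:0:0,scale=1280:720,setsar=1:1[gps]",   # gameplay area
        "[map]crop=228:228:0:720[croppedmap]",                    # map area
        "[0:1]highpass=f=200,lowpass=f=3000[gpaudio]",            # game audio clean-up
    ]
    return ";".join(chains)


def crop_box(w: int, h: int, x: int, y: int) -> tuple:
    """Turn crop=w:h:x:y into (left, top, right, bottom) pixel bounds."""
    return (x, y, x + w, y + h)


print(build_filter_graph())
print(crop_box(1152, 720, 0, 0))   # gameplay occupies the top of the recording
print(crop_box(228, 228, 0, 720))  # the map starts directly below it
```

Seen this way, the two crops never overlap: the map is recorded underneath the gameplay area and simply cut out of the same frame.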

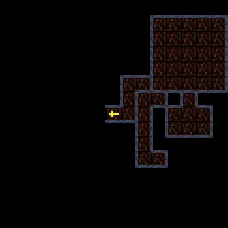

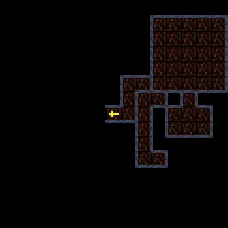

Running the script as is results in the creation of two new MP4 video files, the first being the gameplay video combining the audio track from the computer and my microphone. The second video file just contains the video of the cropped map, which in turn looks just like this:

This is certainly a start, but I would like to recreate the same type of effect that I was able to generate in Davinci Fusion. The entire point of the first 1000 words of this article was to introduce the fundamentals required to create an alpha masked video element that can be imported easily with its alpha channel intact into my editor of choice.

Alpha Manipulation with FFMPEG

As I am writing these words I don't actually know how to go about what I am trying to do here, but let's take a look at how ffmpeg handles drawing a canvas. We'll give ourselves a simple goal at first: let's set an entire video to 20% opacity (the @.2 alpha value in the command below) and export it as a VP9.

    ffmpeg -f lavfi -i color=color=red@.2:size=228x228,format=yuva420p ^
    -t 20 -c:v vp9 redcanvas20.webm

That wasn't so hard, but let's take a look at the command.

- Use -f lavfi as input format to the libavfilter virtual device
- Set the filter input source using the -i flag (not -vf)
- Provide arguments as complete key-value pairs, like: color=color=red
- Select the yuva420p pixel format for compatibility with vp9 alpha export.

Note: If you are planning to export to ProRes4444, be sure to use the yuva444p10le pixel format instead.

Ok so let's try something a little more complicated, let's draw a diagonal line and make the rest of our generated video clip transparent. We use the geq filter to do this because it allows us to directly manipulate the alpha level of every pixel using an expression. I will drop the @.2 from the color filter as well since it won't be needed after this.

    ffmpeg -f lavfi -i color=color=red:size=228x228,format=yuva420p,^
    geq=lum='p(X,Y)':a='if(eq(X,Y),255,0)' ^
    -t 20 -c:v vp9 redline20.webm

Note: Before I go on I should mention that I don't have any prior experience with the geq filter and similar advanced ffmpeg filtering techniques. All the examples you are reading here are created from snippets I have found left in old emails and user questions on the web combined with a snippet of late night coffee and figuring it out for myselfedness.

Let's dissect the geq filter written above:

    geq=lum='p(X,Y)':a='if(eq(X,Y),255,0)'
        |----------| |-------------------|
             |                 |
         Luminance      Alpha Expression

As best I can tell, every geq filter command requires a luminance expression. The p(X,Y) expression here returns the current luminance value for each pixel and sets it to itself. Since we need some expression here, setting the image to its source values is preferred.

The second part of the filter is the optional alpha channel expression, where we manually calculate the desired alpha channel for every pixel. In our example here if(eq(X,Y),255,0) is our expression, which says roughly: if the X and Y coordinates are equal, set the current pixel's alpha channel to 255, otherwise set it to 0. What results is a straight diagonal line that your grade school algebra teacher taught you all about in grade 7. Try it for yourself, and fiddle with the expression, you can generate any angle you want if you remember the old slope formula:

\[Y = mX + b\]
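To see what a per-pixel alpha expression does without running ffmpeg, here is a small Python sketch that evaluates the same kind of test for every pixel of a tiny canvas. It assumes a line of the form Y = m*X + b, with m and b as illustrative parameters, and it rounds to the nearest pixel row, which is my own choice and not something the geq example does.

```python
def line_alpha(x: int, y: int, m: float = 1.0, b: float = 0.0) -> int:
    """Alpha for one pixel: 255 on the line y = m*x + b, otherwise 0."""
    return 255 if y == round(m * x + b) else 0


def render(width: int, height: int, m: float = 1.0, b: float = 0.0) -> list:
    """Return the alpha plane as rows of '#' (opaque) and '.' (transparent)."""
    return [
        "".join("#" if line_alpha(x, y, m, b) else "." for x in range(width))
        for y in range(height)
    ]


# m=1, b=0 is the same test as if(eq(X,Y),255,0)
for row in render(6, 6):
    print(row)
```

Because row 0 is printed first, the line runs from the top left to the bottom right, which matches how ffmpeg numbers its rows.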

Evaluating FFMPEG Expressions

Drawing the line was pretty straightforward, but how did I know to use the if(eq(X,Y),255,0)? Well, truthfully I saw an example of this on some old mailing list archive, but it dawned on me that ffmpeg has a large collection of expressions that we can use.

The expression documentation on the ffmpeg website lists a full set of expressions that we can use when designing our formulas. But I will share a handful that catch my eye:

| Expression | Description |
|---|---|
| if(A, B, C) | If \(A \neq 0\) return \(B\), otherwise return \(C\) |
| gte(A, B) | Return \(1\) if \(A \geq B\), otherwise \(0\) |
| lte(A, B) | Return \(1\) if \(A \leq B\), otherwise \(0\) |
| hypot(A, B) | Returns \(\sqrt{A^2 + B^2}\) |
| round(expr) | Rounds expr to the nearest integer |
| st(var, expr) | Save value of expr in var from \(\{0, \dots, 9\}\) |
| ld(var) | Returns value of internal variable var saved with st |

There are also a number of predefined variables:

| Variable | Description |
|---|---|
| N | The sequential number of the filtered frame, starting from 0 |
| X, Y | Coordinates of the currently evaluated pixel |
| W, H | The width and height of the image |
| T | Time of the current frame, expressed in seconds. |
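If it helps to think of these in a language you already know, here are rough Python stand-ins for a few of the functions in the table. The ff_ prefixed names are invented for this sketch, and this is only my reading of the documented behaviour, not ffmpeg's actual implementation.

```python
import math

_registers = [0.0] * 10  # st/ld work on ten numbered slots, 0 through 9


def ff_if(a, b, c):
    """if(A, B, C): B when A is non-zero, otherwise C."""
    return b if a != 0 else c


def ff_eq(a, b):
    """eq(A, B): 1 when the values are equal, otherwise 0."""
    return 1 if a == b else 0


def ff_gte(a, b):
    """gte(A, B): 1 when A >= B, otherwise 0."""
    return 1 if a >= b else 0


def ff_lte(a, b):
    """lte(A, B): 1 when A <= B, otherwise 0."""
    return 1 if a <= b else 0


def ff_st(var, value):
    """st(var, expr): store the value in slot var and return it."""
    _registers[var] = value
    return value


def ff_ld(var):
    """ld(var): return the value previously stored in slot var."""
    return _registers[var]


# The diagonal line test, written with the stand-ins
print(ff_if(ff_eq(3, 3), 255, 0))
print(ff_if(ff_eq(3, 4), 255, 0))
# Store a distance once, then reuse it
ff_st(0, math.hypot(3, 4))
print(ff_ld(0))
```

The comparison functions return plain numbers rather than booleans, which is why they can be fed straight into if() as its first argument.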

Drawing shapes in FFMPEG with Alpha!

Alright so let's practice a bit with the geq filter and see if we can create commands that can draw some simple shapes and patterns.

Set lower third of video opaque:

    ffmpeg -f lavfi -i color=color=red:size=228x228,format=yuva420p,^
    geq=lum='p(X,Y)':a='if(gte(Y,2*H/3),255,0)' ^
    -t 20 -c:v vp9 redlowerthird20.webm

This might catch you by surprise, but look at the expression gte(Y,2*H/3) or \(Y \geq \frac{2}{3}H\). It may seem odd that setting the lower third of the video to opaque is done by filtering for pixels with a \(Y\) coordinate greater than \(\frac{2}{3}\) of \(H\). The reason for this is that the top left of the image is really \((0,0)\) and the \(Y\) coordinate goes up as we move towards the bottom of the image. There is surely some sort of official name for this, but you need to become familiar with working in this mirrored positive coordinate space.
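A quick way to convince yourself about the flipped Y axis is to run the same test in Python for a canvas 228 rows tall and see which rows come out opaque. This is just a sanity check of the arithmetic; the function name is made up for the example.

```python
def lower_third_alpha(y: int, height: int) -> int:
    """Python version of the alpha expression if(gte(Y,2*H/3),255,0)."""
    return 255 if y >= 2 * height / 3 else 0


H = 228
opaque_rows = [y for y in range(H) if lower_third_alpha(y, H) == 255]

# First opaque row, last opaque row, and how many rows are opaque
print(opaque_rows[0], opaque_rows[-1], len(opaque_rows))  # 152 227 76
```

Rows 152 through 227 are the ones nearest the bottom edge, and 76 rows is exactly one third of 228.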

Let's try another, let's fill in the top right diagonal with opaque pixels:

    ffmpeg -f lavfi -i color=color=red:size=228x228,format=yuva420p,^
    geq=lum='p(X,Y)':a='if(lte(Y,X),255,0)' ^
    -t 20 -c:v vp9 redtopdiagonal20.webm

Now, pixels where \(Y \leq X\) are opaque and the others are transparent, which gives this pattern you can see here where the bottom left diagonal half of the image is transparent.

So far so good? I hope your algebra and graphing lessons from when you were 12 are coming back.

Draw a circle in FFMPEG

Let's try something more complicated and try to draw a circle in ffmpeg with a radius of 100 pixels. But before we get into this let's go back to grade 7 math once more because we need to remind ourselves about the identities related to circles.

A circle's radius \(R\) is described by Pythagoras:

\[X^2 + Y^2 = R^2\]

So if we want to draw a circle we just need to set any pixel opaque where:

\[\sqrt{X^2 + Y^2} \leq R\]

Also, do you remember my table of fancy expressions available in ffmpeg? Specifically, we should use hypot(A, B) as part of our command, so it should look a bit like this:

    ffmpeg -f lavfi -i color=color=red:size=228x228,format=yuva420p,^
    geq=lum='p(X,Y)':a='if(lte(hypot(X,Y),100),255,0)' ^
    -t 20 -c:v vp9 redcircle20-1.webm

Well, that doesn't look right. What have we done wrong? Well, we've drawn the circle around the origin of our coordinate base so we end up missing 75% of our circle! We need to translate our circle over a smidge, so let's figure out the math behind translating a circle.

It turns out that a quick read of my grade 3 math textbook indicates that the origin of a circle can be translated to any point \((A,B)\) with the following identity:

\[(X-A)^2 + (Y-B)^2 = R^2\]

So far so good, and if we want to place our circle in the center of our canvas we need to use \(A=\frac{W}{2}\), \(B=\frac{H}{2}\) which leads to:

\[\sqrt{(X-\frac{W}{2})^2 + (Y-\frac{H}{2})^2} \leq R\]

Adapting that to our ffmpeg command we can move the origin to the centre of our canvas like this:

    ffmpeg -f lavfi -i color=color=red:size=228x228,format=yuva420p,^
    geq=lum='p(X,Y)':a='if(lte(hypot(X-(W/2),Y-(H/2)),100),255,0)' ^
    -t 20 -c:v vp9 redcircle20.webm

Tada!
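The "missing 75%" claim is easy to check numerically. The Python sketch below counts opaque pixels on a 228x228 canvas for a circle at the origin and for the same circle moved to the centre. The helper names are invented for the example, and it only mimics the alpha test, not the rest of the filter.

```python
import math

W = H = 228
R = 100


def circle_alpha(x, y, cx, cy, radius):
    """Alpha for one pixel: opaque inside the circle centred on (cx, cy)."""
    return 255 if math.hypot(x - cx, y - cy) <= radius else 0


def opaque_pixels(cx, cy, radius, width=W, height=H):
    """Count the opaque pixels a circle leaves on a width x height canvas."""
    return sum(
        circle_alpha(x, y, cx, cy, radius) == 255
        for y in range(height)
        for x in range(width)
    )


at_origin = opaque_pixels(0, 0, R)        # first attempt: hypot(X,Y)
centred = opaque_pixels(W / 2, H / 2, R)  # second attempt: translated
print(at_origin / centred)                # roughly a quarter
```

Only the quadrant with positive X and Y lands on the canvas in the first attempt, so the ratio comes out close to one quarter.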

Alpha Masking with FFMPEG

Ok kids, we're finally getting to the meat and potatoes of the article. We now understand the basics behind drawing shapes, and we want to use those shapes as masks to make part of a video transparent. Do you remember that image of the raw map from my classic computer game? Well, let's take what we have learned to mask out a circle in that map video.

We will need two new ffmpeg filters: alphaextract and alphamerge. The alphaextract filter takes the alpha component and outputs grayscale; the alphamerge filter takes grayscale input and a target video source and masks the target.

Usage of these commands looks a little bit like this.

    ffmpeg -f lavfi -i color=color=red:size=228x228,format=yuva420p,^
    geq=lum='p(X,Y)':a='if(lte(hypot(X-(W/2),Y-(H/2)),100),255,0)' ^
    -i map.mp4 ^
    -filter_complex "[0]alphaextract[a];[1][a]alphamerge" ^
    -c:v vp9 maskedmap.webm

This is how you add a circular mask over a video with ffmpeg! Cool.
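Conceptually, the two filters are doing something quite simple to each frame. The toy Python model below uses nested lists of pixel tuples to show the idea; it borrows the filter names for its functions but is only a mental model, since ffmpeg works on its own planar pixel formats rather than RGBA tuples.

```python
def alphaextract(rgba_frame):
    """Return the alpha plane of a frame as a grayscale plane."""
    return [[pixel[3] for pixel in row] for row in rgba_frame]


def alphamerge(rgb_frame, gray_mask):
    """Attach a grayscale mask to an opaque frame as its alpha channel."""
    return [
        [(r, g, b, a) for (r, g, b), a in zip(row, mask_row)]
        for row, mask_row in zip(rgb_frame, gray_mask)
    ]


# A 2x2 red "mask" frame: only the diagonal pixels are opaque
mask_frame = [
    [(255, 0, 0, 255), (255, 0, 0, 0)],
    [(255, 0, 0, 0), (255, 0, 0, 255)],
]
# A 2x2 opaque "map" frame standing in for map.mp4
map_frame = [
    [(10, 20, 30), (40, 50, 60)],
    [(70, 80, 90), (100, 110, 120)],
]

gray = alphaextract(mask_frame)
masked = alphamerge(map_frame, gray)
print(gray)
print(masked[0])
```

Notice that the red colour of the mask frame never reaches the result: only its alpha plane is used, and the colours all come from the map.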

Finishing touches

We still are not quite finished. As you may have noticed, I did not take the time to feather the alpha mask on the map video above, so the edges of the video are quite sharp. I could have written an expression to soften the edge of the mask, and I may show how to do that in a future article, but in this case I want to actually apply another effect to my map entirely.
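For the curious, one possible way to think about feathering is a linear ramp in alpha over the last few pixels before the edge. The Python sketch below shows that idea only; the feather width is a made-up parameter, this is not the expression used anywhere in this article, and I have not tested an ffmpeg equivalent (the expression documentation does list a clip function that looks suited to it).

```python
import math


def feathered_alpha(x, y, width, height, radius, feather):
    """Alpha that fades linearly from 255 to 0 across the outer edge.

    Pixels closer to the centre than (radius - feather) are fully opaque,
    pixels beyond radius are fully transparent. feather must be > 0.
    """
    d = math.hypot(x - width / 2, y - height / 2)
    ramp = (radius - d) / feather
    return round(255 * min(1.0, max(0.0, ramp)))


W = H = 228
print(feathered_alpha(114, 114, W, H, 100, 10))  # centre of the circle
print(feathered_alpha(19, 114, W, H, 100, 10))   # halfway through the ramp
print(feathered_alpha(14, 114, W, H, 100, 10))   # right on the outer edge
```

A wider feather value gives a softer edge, at the cost of eating further into the visible part of the map.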

I want to take this image that I drew in CorelDRAW, using my vast experience of occasionally making posters once every half decade, and overlay it onto our masked map to create a more polished final result.

    ffmpeg -f lavfi -i color=color=red:size=228x228,format=yuva420p,^
    geq=lum='p(X,Y)':a='if(lte(hypot(X-(W/2),Y-(H/2)),90),255,0)' ^
    -i map.mp4 ^
    -i mapcompass-1.png -loop 1 ^
    -filter_complex "[0]alphaextract[a];[1][a]alphamerge[mask];[mask][2]overlay" ^
    -c:v vp9 finishedmap.webm

The result looks like this:

Summary

What should you take away from this extensive article? Well, for one thing I hope that I have demonstrated that you can create decent composite video with ffmpeg. Certainly the user interface is not fluid or easy to understand like the more robust tools (eg. Davinci Fusion or After Effects), but with patience you can absolutely create decent effects. Moreover, the platform is absolutely stable in comparison to Resolve, which as I mentioned is completely hideous to use on my computer.

I think that scripted systems like the one we created here today truly have their place in handling pre-rendered scenes and animations. I don't have any idea how much value this kind of documentation is to the world at large, since I am fairly certain that people generally just want to use a point and click environment to produce their whirlywoos, but I'm glad that I took the time to write everything down here. I know I will personally be using scripts like the one we created.

Thanks for reading! Also take some time and watch my derpy YouTube stuff to see how it all turns out.

Source: https://curiosalon.github.io/blog/ffmpeg-alpha-masking/
